In this episode, we’re diving into the biggest problem plaguing long-running LLM deployments: context drift and brevity bias. Your models start strong, but they decay over time, demanding costly retraining and frustrating MLOps teams. Static prompt engineering is a dead end.

Today, we’re unlocking Agentic Context Engineering, or ACE—the future of production AI. Detailed in an essential article by the experts at Diztel, ACE is not just a better prompt; it’s a fully operational system layer. It allows your LLMs to learn through adaptive memory and evolve instructions automatically, just like a human team member.

🚀Stop Marketing to the General Public. Talk to Enterprise AI Builders.

Your platform solves the hardest challenge in tech: getting secure, compliant AI into production at scale.

But are you reaching the right 1%?

AI Unraveled is the single destination for senior enterprise leaders—CTOs, VPs of Engineering, and MLOps heads—who need production-ready solutions like yours. They tune in for deep, uncompromised technical insight.

We have reserved a limited number of mid-roll ad spots for companies focused on high-stakes, governed AI infrastructure. This is not spray-and-pray advertising; it is a direct line to your most valuable buyers.

Don’t wait for your competition to claim the remaining airtime. Secure your high-impact package immediately.

Secure Your Mid-Roll Spot: https://buy.stripe.com/4gMaEWcEpggWdr49kC0sU09

Key Takeaways:

1. Instead of static prompts, Agentic Context Engineering (ACE) enables LLMs to “learn” through instructions, examples, and adaptive memory.

2. ACE directly tackles context drift and brevity bias, the two biggest killers of long-running AI performance.

3. Think of ACE as turning prompt engineering into a system layer — a pipeline that evolves and curates instructions automatically.

4. The real power of the framework? Evaluation and self-reflection are its core primitives, making it possible to benchmark and auto-improve agents over time, reducing the need for retraining.

5. ACE can integrate into enterprise MLOps pipelines as a governance layer, giving teams visibility into how context evolves, and offering levers for optimization without costly model retraining.

Tools / Tech: ACE (Agentic Context Engineering) is a conceptual framework with its own modular components:

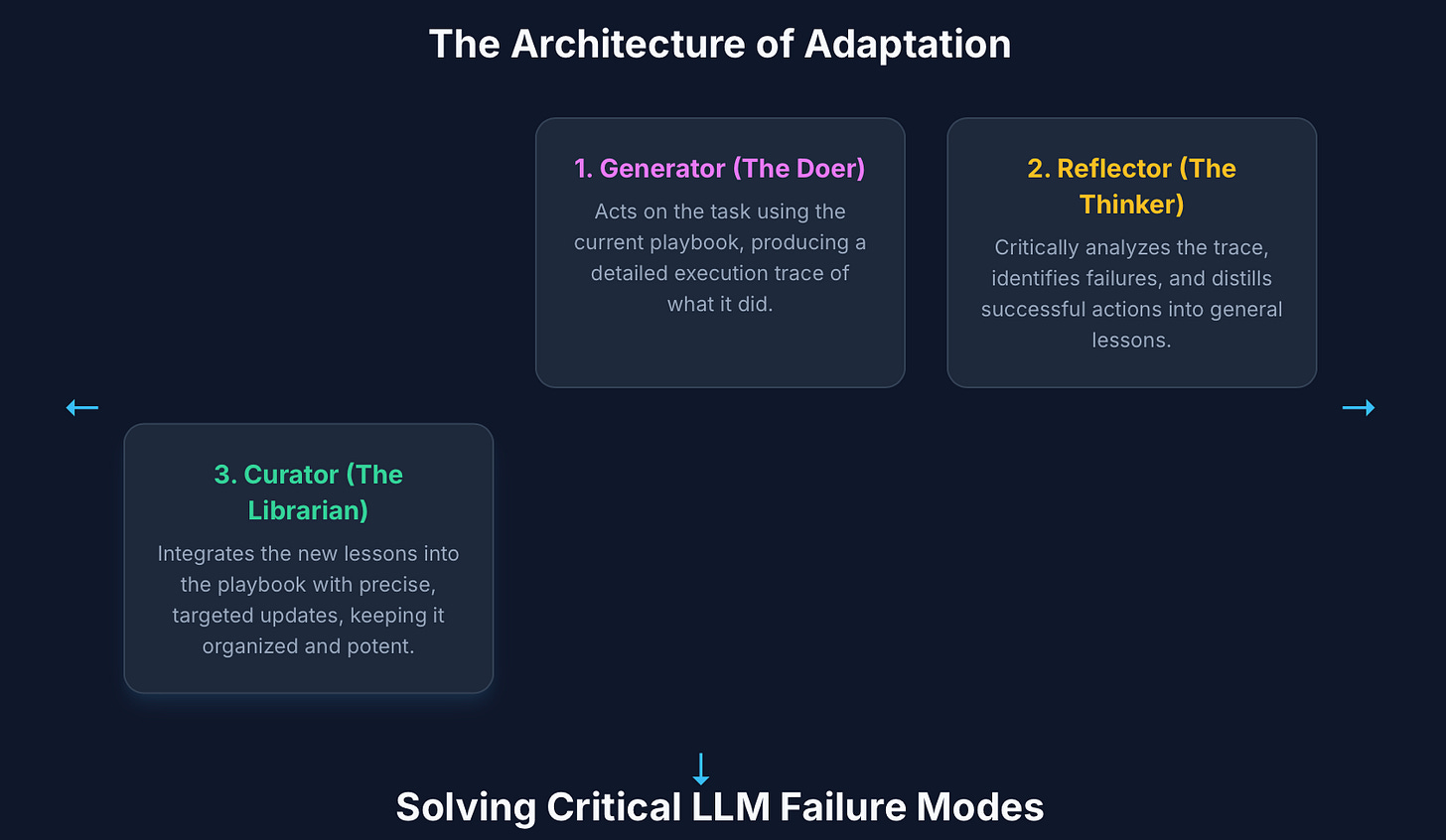

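Generator → Attempts tasks using the current playbook, producing candidate outputs along with a trace of its reasoning and actions.

Reflector → Evaluates those traces and outputs, identifies what worked and what failed, and distills the findings into concrete lessons.

Curator → Merges those lessons into the playbook through small, targeted updates, keeping it organized as a coherent, long-term context.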

“ACE doesn’t just prompt models — it teaches them how to remember, reflect, and evolve without retraining.”

Source (Diztel): https://diztel.com/ai/agentic-context-engineering-ace-the-future-of-ai-is-here/

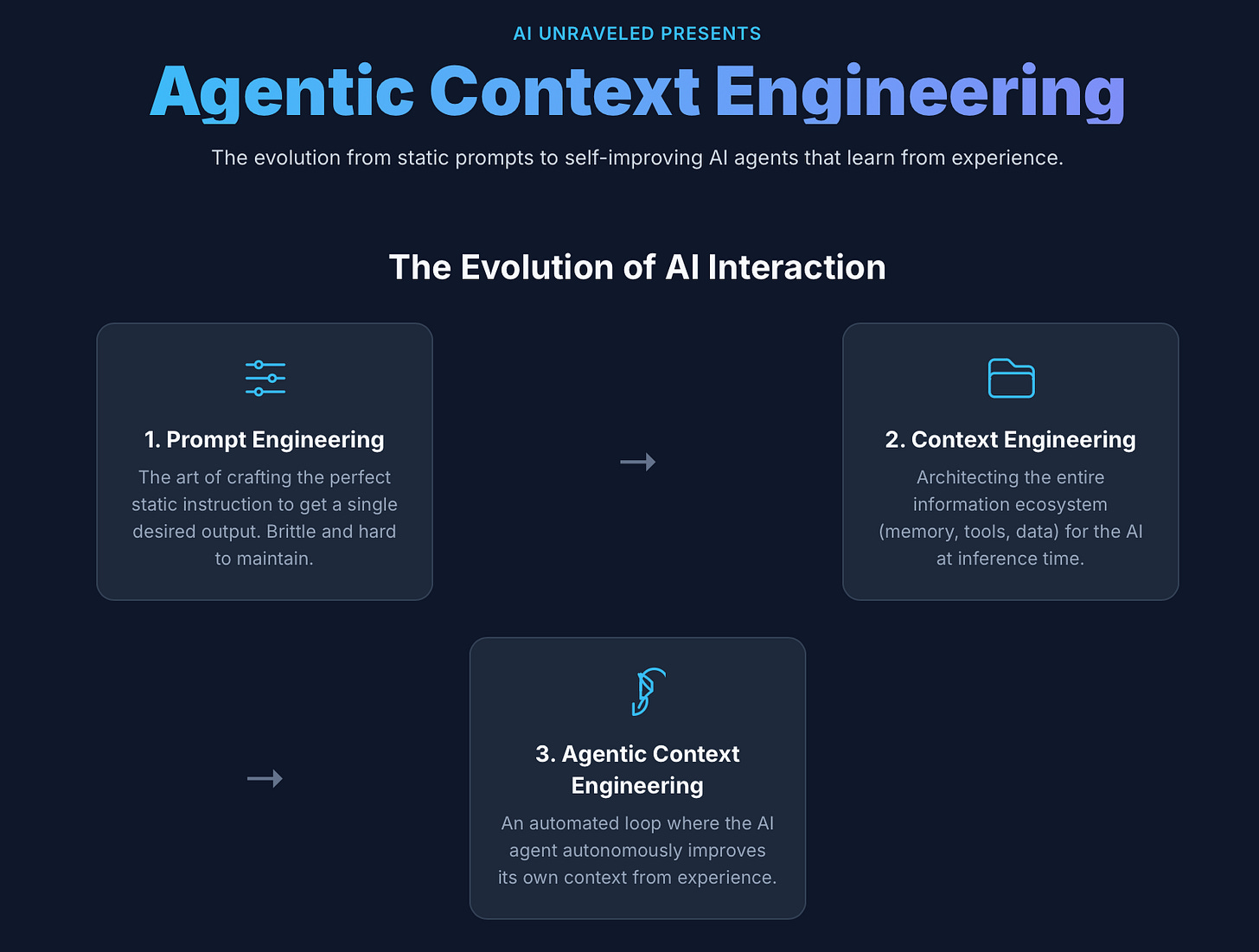

Part I: The Evolution of AI Interaction - Beyond the Prompt

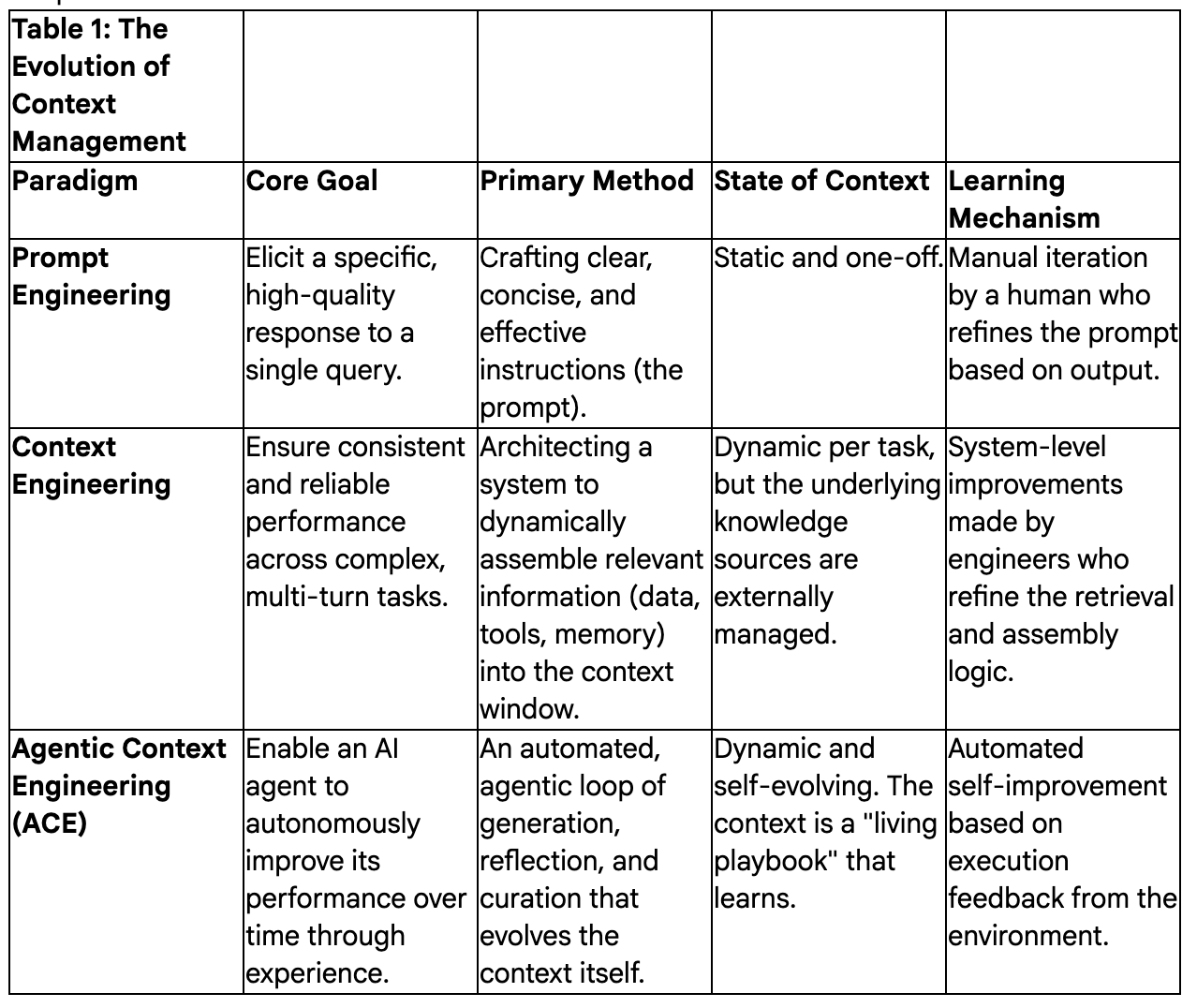

The journey toward more capable artificial intelligence is intrinsically linked to the evolution of how we communicate with it. What began as a simple command-line interaction has blossomed into a complex dance of instruction, data, and feedback. To understand the significance of recent breakthroughs like Agentic Context Engineering (ACE), one must first trace this path from crafting static instructions to architecting dynamic, intelligent information ecosystems.

Section 1: From Static Instructions to Dynamic Conversations

1.1 The Era of Prompt Engineering: The Art of the Ask

The initial method for interacting with Large Language Models (LLMs) was prompt engineering, a craft focused on designing the perfect, often concise, instruction to elicit a desired response.1 In this early phase, precision was paramount; every token in the prompt was deliberate, and the goal was to achieve a specific, one-off output, such as generating copywriting variations or a single block of code.1

As models grew more sophisticated, so did the techniques. Practitioners moved beyond simple commands to incorporate more complex strategies like chain-of-thought guidance to structure the model’s reasoning, few-shot examples to provide concrete illustrations, and detailed contextual hints to ground the model in a specific domain.2 However, this expansion created a new challenge: “context overload.” Prompts ballooned from disciplined, focused instructions into elaborate, multi-part narratives that were brittle and difficult to maintain.2 The fundamental limitation of this approach remained its static nature. Once written, the prompt was a fixed instruction; it could not learn from success or failure, and debugging a poor output often amounted to little more than “rewording and guessing” what went wrong.1

1.2 The Rise of Context Engineering: Building the Information Ecosystem

The pressure to manage these increasingly unwieldy prompts gave rise to a more systematic discipline: context engineering. This represented a conceptual leap from focusing on a single instruction to architecting the entire information environment that an LLM observes at the moment of inference.4 The central idea shifted from what you ask to what the model knows when you ask it.1

Context engineering recognizes that an LLM’s performance is a function of all the information it is given. This “context packet” is assembled dynamically for each request and can include a variety of components:

System Instructions: High-level directives defining the AI’s role, goals, and constraints.7

Memory: Both short-term memory (e.g., recent conversation history) and long-term memory (e.g., user preferences stored in a database).7

Retrieved Knowledge: External documents or data fetched in real-time, often through Retrieval-Augmented Generation (RAG), to provide factual grounding.7

Tool Definitions: Descriptions of external tools or APIs the model can use to act upon the world.7

User Query: The immediate question or task from the user.7
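The five components above can be pictured as fields of a single structure that is rendered into one prompt at inference time. The sketch below is a minimal illustration under stated assumptions, not any framework’s API: the `ContextPacket` class, its field names, the section headers, and the rendering order are all hypothetical choices made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class ContextPacket:
    """Hypothetical container for everything the model sees at inference time."""
    system_instructions: str
    user_query: str
    short_term_memory: list[str] = field(default_factory=list)       # recent turns
    long_term_memory: dict[str, str] = field(default_factory=dict)   # e.g. stored preferences
    retrieved_knowledge: list[str] = field(default_factory=list)     # e.g. RAG results
    tool_definitions: list[str] = field(default_factory=list)        # tool/API descriptions

    def render(self) -> str:
        """Assemble the components into one prompt string, skipping empty sections."""
        profile = [f"{k}: {v}" for k, v in sorted(self.long_term_memory.items())]
        sections = [
            ("SYSTEM", [self.system_instructions]),
            ("USER PROFILE", profile),
            ("TOOLS", self.tool_definitions),
            ("RETRIEVED DOCUMENTS", self.retrieved_knowledge),
            ("RECENT CONVERSATION", self.short_term_memory),
            ("USER QUERY", [self.user_query]),
        ]
        return "\n\n".join(f"## {title}\n" + "\n".join(items)
                           for title, items in sections if items)


if __name__ == "__main__":
    packet = ContextPacket(
        system_instructions="You are a billing support assistant. Never reveal card numbers.",
        user_query="Why was I charged twice this month?",
        short_term_memory=["user: Hi", "assistant: Hello! How can I help?"],
        long_term_memory={"plan": "Pro"},
        retrieved_knowledge=["(example policy doc) Duplicate charges are refunded within 5 business days."],
        tool_definitions=["lookup_invoices(customer_id) -> list of invoices"],
    )
    print(packet.render())
```

Putting stable instructions first and the user query last is one common convention rather than a requirement; the point is that the packet is assembled per request from separately managed sources.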

The goal of context engineering is to orchestrate these elements, ensuring the model receives the right information in the optimal format at precisely the right moment.4 This approach improved the robustness and repeatability of AI systems, but a subtle limitation persisted. The context itself, while dynamically assembled, was not inherently self-improving. The rules for assembling the context were external, and the knowledge within it did not evolve based on the AI’s own performance.2

This evolution from prompt to context engineering signals a deeper shift in how we conceptualize LLMs. Early prompt engineering treated the model like a “calculator for words”—a transactional tool where a carefully framed input produces a desired output. Context engineering, conversely, treats the model as a “reasoning engine.” It acknowledges that for any complex, multi-step task, the model is not merely executing a command but is reasoning over a body of information. The quality of this reasoning is therefore directly bottlenecked by the quality, relevance, and structure of the information it can access.5 This elevates the required skill set from clever wordsmithing to a form of information systems design.1

However, this paradigm still encounters a performance ceiling that cannot be overcome simply by increasing a model’s size or the length of its context window. Research and practical application have shown that models can suffer from a “lost-in-the-middle” problem: information placed in the middle of a long context is recalled and used less reliably than information at its beginning or end, and overall quality can degrade as the context grows longer.10 A static context, no matter how well-engineered at the outset, cannot adapt to unforeseen errors or novel situations that arise during a task because it lacks an intrinsic feedback loop. A new architectural approach was needed: one that could make the context itself intelligent and adaptive.

Section 2: The Dawn of Agentic AI

The limitations of static context became particularly acute with the emergence of agentic AI. This new class of AI systems represents a fundamental departure from the reactive models that preceded them, demanding a correspondingly advanced approach to information management.

2.1 Defining “Agentic”: More Than a Chatbot

Agentic AI refers to autonomous systems that can perceive their environment, set goals, create plans, and execute multi-step tasks with limited or no human intervention.12 The term “agentic” signifies agency—the capacity to act independently and purposefully to achieve an objective.14 Unlike a traditional chatbot that primarily responds to commands, an AI agent is a proactive problem-solver.16

The core capabilities of an agentic system can be broken down into a continuous loop 13:

Perception: Gathering data from the environment through sensors, APIs, databases, or user interactions.

Reasoning: Using an LLM as a “brain” to analyze the gathered data, understand the context, and formulate potential strategies.

Planning: Breaking down a high-level goal into a sequence of concrete, executable steps.

Action: Executing these steps, often by calling external tools, interacting with other systems, or generating responses.

Learning: Evaluating the outcome of its actions and adapting its future behavior.
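To make the five-stage loop concrete, here is a deliberately tiny sketch in which the “environment” is a room with a heater whose strength the agent does not know in advance. In a real agent, reasoning and planning would be LLM calls and actions would be tool invocations; the `Room` environment, the function names, and the gain-estimation “learning” rule are illustrative assumptions, not part of any framework.

```python
import math
from dataclasses import dataclass


@dataclass
class Room:
    """Toy environment (hypothetical): heater commands are scaled by a gain the agent can't see."""
    temperature: float
    gain: float = 2.5

    def apply(self, command: float) -> None:
        self.temperature += command * self.gain


def perceive(env: Room) -> float:
    """Perception: read the environment's current state."""
    return env.temperature


def reason(observation: float, goal: float) -> float:
    """Reasoning: interpret the observation against the goal (positive = too cold)."""
    return goal - observation


def plan(error: float, max_step: float) -> list[float]:
    """Planning: split the desired change into equal steps no larger than max_step."""
    n_steps = max(1, math.ceil(abs(error) / max_step))
    return [error / n_steps] * n_steps


def run_agent(env: Room, goal: float, max_step: float = 4.0,
              tolerance: float = 0.25, max_iters: int = 20) -> tuple[list[float], float]:
    learned_gain = 1.0  # the agent's current belief about how the heater responds
    history = []
    for _ in range(max_iters):
        observation = perceive(env)
        history.append(observation)
        error = reason(observation, goal)
        if abs(error) <= tolerance:
            break
        desired_change = plan(error, max_step)[0]    # commit to the first planned step
        command = desired_change / learned_gain
        env.apply(command)                            # Action
        observed_change = perceive(env) - observation
        if command != 0 and observed_change != 0:     # Learning: update the world model
            learned_gain = observed_change / command
    return history, learned_gain


if __name__ == "__main__":
    room = Room(temperature=15.0)
    history, gain = run_agent(room, goal=21.0)
    print(history, round(gain, 3))
```

The first action overshoots because the agent assumes a gain of 1.0; the learning step then corrects its model of the environment, and subsequent actions land on target.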

This shift from a “knowledge worker assistant” to an “autonomous knowledge worker” has profound implications. Where a generative AI tool might assist a human in writing a report, an agentic system could be tasked with producing the report from start to finish: conducting the research, analyzing the data, drafting the text, creating visualizations, and distributing the final product. This vision of an “agentic organization,” where entire workflows are managed by collaborative teams of humans and AI agents, necessitates a robust mechanism for autonomous learning and adaptation.18

2.2 Why Agentic Systems Demand a New Approach to Context

The autonomy of agentic systems makes effective context management a mission-critical requirement. When an agent operates over a long sequence of steps, making its own decisions about which tools to use and what actions to take, the quality of its context directly determines its success or failure. Poor context management in an autonomous system does not just lead to a bad answer; it leads to incorrect actions, wasted resources, and a complete failure to achieve the goal.20

A primary challenge that arises is context bloat. As an agent interacts with its environment—calling tools, reading files, receiving observations—its context window rapidly fills with information. Much of this information may be relevant for one step but becomes noise in subsequent steps. This accumulation of irrelevant data degrades the model’s reasoning ability, increases latency, and drives up computational costs.10 The engineering challenge, therefore, is to create a system that can not only assemble relevant context but also intelligently prune, compress, and, most importantly, learn from it. This is the precise problem that Agentic Context Engineering was designed to solve.
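One simple way to keep an agent’s working context from bloating is to give it a fixed budget. When the budget is exceeded, the instructions and most recent observations are kept verbatim while older ones are collapsed into short stubs or dropped. The sketch below assumes whitespace-separated words as a stand-in for real tokenizer counts and uses truncation in place of LLM-based summarization; `prune_context` and its parameters are hypothetical names.

```python
def count_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: whitespace-separated words."""
    return len(text.split())


def compress(observation: str, stub_words: int) -> str:
    """Stand-in for LLM summarization: keep only the first few words."""
    words = observation.split()
    if len(words) <= stub_words:
        return observation
    return " ".join(words[:stub_words]) + " [...]"


def prune_context(system: str, observations: list[str], budget: int,
                  keep_recent: int = 2, stub_words: int = 5) -> list[str]:
    """Fit the context into `budget` tokens.

    The system prompt and the `keep_recent` newest observations are kept verbatim;
    older observations are compressed, and the oldest are dropped if still over budget.
    (If the system prompt plus recent observations alone exceed the budget, the
    result will still be over budget.)
    """
    split = max(len(observations) - keep_recent, 0)
    older, recent = observations[:split], observations[split:]
    compressed = [compress(o, stub_words) for o in older]

    def total(parts: list[str]) -> int:
        return sum(count_tokens(p) for p in parts)

    while compressed and total([system, *compressed, *recent]) > budget:
        compressed.pop(0)  # drop the oldest compressed observation first
    return [system, *compressed, *recent]


if __name__ == "__main__":
    obs = ["listed 40 files in src including many test fixtures and configs",
           "opened main.py which defines the CLI entry point and argument parser",
           "ran grep for TODO and found three matches",
           "opened utils.py"]
    for line in prune_context("You are a file-search agent.", obs, budget=30):
        print(line)
```

Recency is only one possible pruning heuristic; a production system might instead score observations by relevance to the current step.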

Part II: Unraveling Agentic Context Engineering (ACE)

Agentic Context Engineering (ACE) is a novel framework developed by researchers at Stanford University, SambaNova Systems, and UC Berkeley that provides a direct solution to the challenges of creating truly adaptive, self-improving AI agents.23 It introduces a new paradigm for AI learning that is more efficient, transparent, and scalable than traditional methods.

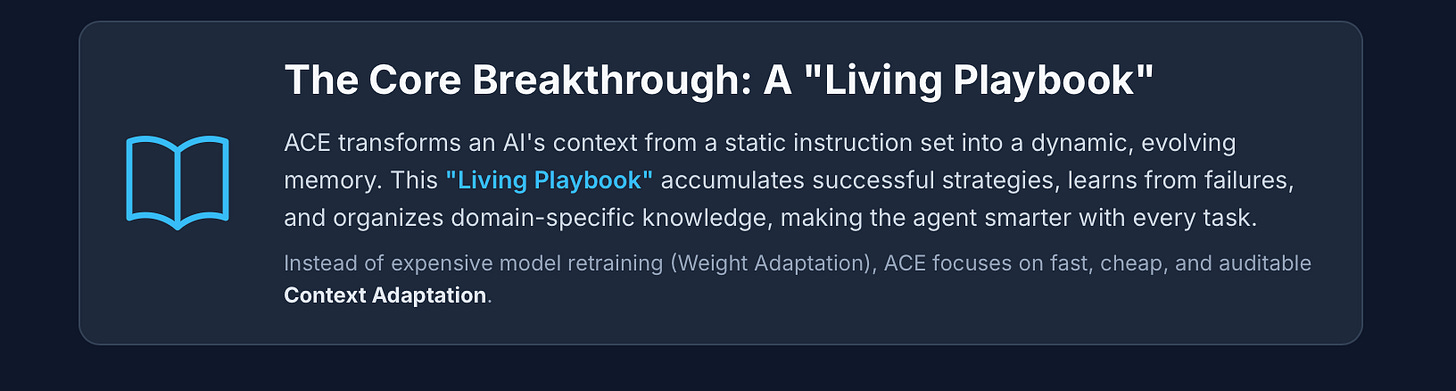

Section 3: The Core Breakthrough: AI That Learns from Experience

3.1 Introducing ACE: The Living Playbook

At its core, ACE is a framework that enables LLMs to improve their performance by systematically refining their own input context, rather than by altering their underlying neural network weights.25 The central metaphor for this process is the transformation of the context from a static set of instructions into a “living playbook”.3

This playbook is a structured, evolving memory that accumulates and organizes successful strategies, domain-specific heuristics, and lessons learned from past failures.25 With each task the AI performs, it receives feedback from its environment—such as a successful code execution or a validation error. The ACE framework uses this feedback to meticulously update the playbook, making the AI smarter and more effective for the next task.26 It is, in essence, a system for institutionalizing experience directly within the agent’s operational memory.
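A playbook of this kind can be represented as a list of itemized entries rather than one block of prose. The sketch below loosely follows that idea. Each entry carries an identifier, a section label, its text, and counters for how often execution feedback judged it helpful or harmful. The class names, fields, counters, and rendering format are assumptions for illustration, not the ACE reference implementation.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Entry:
    entry_id: str
    section: str
    text: str
    helpful: int = 0   # hypothetical feedback counters
    harmful: int = 0


@dataclass
class Playbook:
    entries: dict[str, Entry] = field(default_factory=dict)
    _ids: count = field(default_factory=lambda: count(1), repr=False, compare=False)

    def add(self, section: str, text: str) -> str:
        """Append a new entry with a unique, stable identifier."""
        entry_id = f"{section[:3].lower()}-{next(self._ids):05d}"
        self.entries[entry_id] = Entry(entry_id, section, text)
        return entry_id

    def record_feedback(self, entry_id: str, helpful: bool) -> None:
        """Attribute an execution outcome (e.g. a test passing or failing) to an entry."""
        entry = self.entries[entry_id]
        if helpful:
            entry.helpful += 1
        else:
            entry.harmful += 1

    def render(self) -> str:
        """Serialize to human-readable text that can be placed in the model's context."""
        lines = []
        for section in sorted({e.section for e in self.entries.values()}):
            lines.append(f"## {section}")
            for e in self.entries.values():
                if e.section == section:
                    lines.append(f"[{e.entry_id}] helpful={e.helpful} harmful={e.harmful} :: {e.text}")
        return "\n".join(lines)


if __name__ == "__main__":
    pb = Playbook()
    tip = pb.add("Strategies", "Paginate API results until the cursor is empty.")
    pb.record_feedback(tip, helpful=True)
    print(pb.render())
```

Because each entry has its own ID, later updates can target a single lesson instead of regenerating the whole document.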

3.2 The Paradigm Shift: From Weight Adaptation to Context Adaptation

Traditionally, improving an AI model’s capabilities has meant weight adaptation—a process of retraining or fine-tuning the model’s billions of parameters on a new dataset. This process is notoriously slow, computationally expensive, and requires vast amounts of labeled data.25

ACE champions context adaptation, which offers several distinct advantages 26:

Interpretability and Control: Because the playbook is stored as human-readable text, developers can directly inspect, audit, and even manually edit the knowledge the AI has learned. This transparency is critical for debugging, ensuring safety, and building trust in autonomous systems.26

Efficiency and Cost: Modifying a text-based context is orders of magnitude faster and cheaper than a full retraining cycle. The ACE framework has been shown to reduce the time it takes for an agent to adapt by over 80%.25

Agility and Unlearning: Context can be updated in near real-time to incorporate new information. This also enables “selective unlearning”—the ability to precisely remove outdated or incorrect knowledge from the playbook. This capability is crucial for maintaining privacy, ensuring compliance with regulations like GDPR, and correcting model errors without a full reset.29

This approach represents a fundamental architectural choice: decoupling the reasoning engine (the LLM) from its operational knowledge base (the playbook). It posits that a powerful reasoning engine can be made vastly more effective not by constantly rewiring its “brain,” but by providing it with a superior, continuously improving knowledge system. This modularity is a hallmark of robust and scalable engineering.

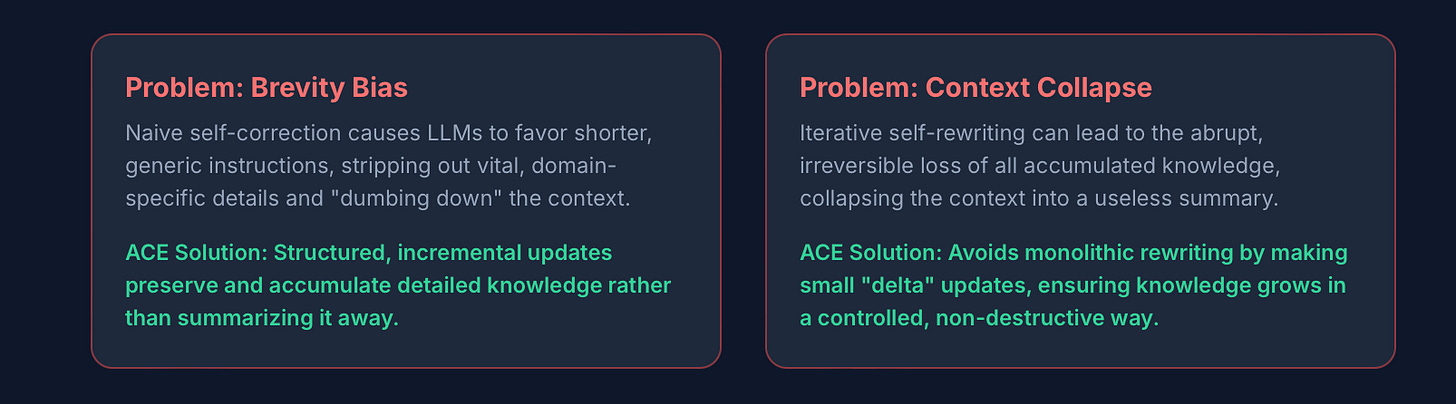

Section 4: Solving the Achilles’ Heel of Modern LLMs: Brevity Bias and Context Collapse

The ACE framework was specifically designed to overcome two critical failure modes that plague less structured methods of context improvement: brevity bias and context collapse.27 These issues arise from the naive use of LLMs to revise their own instructions and can lead to a paradoxical situation where attempts at self-improvement result in catastrophic performance degradation.

4.1 The Problem of Brevity Bias

Brevity bias is the tendency for automated context optimization methods to favor shorter, more generic instructions at the expense of vital, domain-specific detail.27 When an LLM is tasked with monolithically rewriting a prompt to “improve” it, it often defaults to summarization, conflating conciseness with quality. This process can strip out the nuanced heuristics and specific strategies that are essential for high performance on complex tasks, leaving the agent with a less informative, dumbed-down set of instructions.3

ACE directly counters this bias. It is built on the observation that LLMs, unlike humans, often benefit from long and detailed contexts, as they possess the ability to autonomously identify and extract the most relevant information at inference time.27 By using a structured, incremental update process, ACE ensures that valuable, detailed knowledge is preserved and accumulated rather than being summarized away.25

4.2 The Danger of Context Collapse

An even more severe failure mode is context collapse. This occurs when an agent’s iterative, monolithic rewriting of its own memory leads to the abrupt and irreversible loss of accumulated knowledge.26 In this scenario, the context erodes over time until it “collapses” into a short, generic, and ultimately useless summary. The hard-won lessons from previous interactions are simply wiped away.26

ACE’s architecture is the direct antidote to this problem. It avoids monolithic rewriting entirely. Instead, its structured update mechanism makes small, precise “delta” updates—adding a new strategy, refining an existing one, or pruning a redundant entry.25 This approach ensures that the playbook grows and improves in a controlled, non-destructive manner, preserving the integrity of its accumulated knowledge over time.25
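The difference between monolithic rewriting and delta updates is easiest to see in code. In the sketch below, the playbook is a dictionary of entry IDs to text. The Curator emits a list of small operations (add, update, remove) that are applied one at a time, and entries that are not mentioned are never touched. The operation names and the `Delta` structure are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Literal, Optional


@dataclass(frozen=True)
class Delta:
    """One hypothetical Curator operation against a single playbook entry."""
    op: Literal["add", "update", "remove"]
    entry_id: str
    text: Optional[str] = None


def apply_deltas(playbook: dict[str, str], deltas: list[Delta]) -> dict[str, str]:
    """Return a new playbook with the deltas applied; the input is left unchanged."""
    updated = dict(playbook)
    for d in deltas:
        if d.op in ("add", "update") and d.text is None:
            raise ValueError(f"{d.op} requires text")
        if d.op == "add":
            if d.entry_id in updated:
                raise ValueError(f"{d.entry_id} already exists")
            updated[d.entry_id] = d.text
        elif d.op == "update":
            if d.entry_id not in updated:
                raise KeyError(d.entry_id)
            updated[d.entry_id] = d.text
        elif d.op == "remove":
            updated.pop(d.entry_id, None)  # removing a missing entry is a no-op
        else:
            raise ValueError(f"unknown op: {d.op}")
    return updated


if __name__ == "__main__":
    v1 = {"b1": "Dates in the calendar app are UTC."}
    v2 = apply_deltas(v1, [Delta("add", "b2", "Paginate list endpoints until no cursor is returned.")])
    print(v2)
```

Untouched entries are copied over verbatim, so repeated rounds of updates cannot silently shorten or summarize knowledge the Curator did not explicitly target. Returning a new dictionary also makes it easy to keep earlier versions for rollback.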

These two problems are not inherent flaws in LLMs themselves, but rather emergent properties of unstructured, autonomous self-modification. They reveal the danger of tasking an AI to improve itself without providing the necessary engineering guardrails. The solution offered by ACE is the imposition of sound data management principles—modularity, structured data, and versioned, incremental updates—onto the process of AI self-reflection, making it safe and productive.

Section 5: The Architecture of Adaptation: Generator, Reflector, and Curator

To achieve structured self-improvement, ACE employs a modular workflow that divides the learning process among three cooperative roles: the Generator, the Reflector, and the Curator.27 This division of labor allows for functional specialization and creates a robust, continuous feedback loop. While these are distinct roles, they are typically all performed by the same underlying LLM, which is guided by different system prompts for each role.3

5.1 The Generator: The Doer

The Generator’s function is to act. Using the current version of the playbook as its guide, it attempts to solve the task at hand.27 In doing so, it produces a detailed execution trace, which includes its reasoning steps, the tools it used, and the final output.3 This trace serves as the raw data for the learning process, clearly showing which strategies from the playbook were effective and where failures or errors occurred.29 In the system’s cognitive loop, the Generator is the “student” attempting the exam.27

5.2 The Reflector: The Thinker

The Reflector’s function is to analyze. It takes the execution trace from the Generator and critically evaluates it.3 Its goal is to perform a root-cause analysis of any failures and to distill successful actions into generalizable principles or heuristics.3 It identifies what went wrong, why it went wrong, and proposes specific, actionable lessons in natural language.27 This process can be iterative, with the Reflector refining its insights over several rounds to produce the most precise and useful feedback.27 The Reflector is the “teacher” grading the exam and providing constructive comments.27

5.3 The Curator: The Librarian

The Curator’s function is to integrate. It takes the actionable lessons generated by the Reflector and carefully incorporates them into the playbook.27 This is done not by rewriting the entire document, but by making targeted delta updates: adding new bullet points, editing existing ones to be more precise, or merging redundant entries.3 The Curator is the “librarian,” ensuring the playbook remains organized, up-to-date, and free of clutter.27

To manage the long-term health of the playbook, the Curator employs a “Grow-and-Refine” mechanism. In the “Grow” phase, new insights are appended as distinct entries, each with a unique identifier. In the periodic “Refine” phase, the Curator de-duplicates the playbook, using techniques like semantic embedding comparison to find and merge similar or redundant entries, thus keeping the context concise and potent.27
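The “Refine” step can be approximated with any similarity measure. So that it runs without a model, the sketch below substitutes a simple bag-of-words cosine similarity for semantic embeddings. Entries whose similarity reaches a threshold are merged by keeping the earlier entry’s ID and the longer, more detailed text. The threshold, the merge rule, and the function names are assumptions; a production system would use a real embedding model instead.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for a semantic embedding: lower-cased word counts."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def refine(entries: dict[str, str], threshold: float = 0.8) -> dict[str, str]:
    """Merge near-duplicate entries, keeping the first ID and the more detailed text."""
    kept: dict[str, str] = {}
    for entry_id, text in entries.items():  # dicts preserve insertion (growth) order
        match = next((kid for kid, ktext in kept.items()
                      if cosine(embed(text), embed(ktext)) >= threshold), None)
        if match is None:
            kept[entry_id] = text
        elif len(text) > len(kept[match]):
            kept[match] = text  # prefer the longer entry so detail is not lost
    return kept


if __name__ == "__main__":
    grown = {"p1": "Retry a failed API call once before escalating.",
             "p2": "Validate dates as ISO 8601 before saving.",
             "p3": "Retry a failed API call once before escalating to a human."}
    print(refine(grown))
```

Preferring the longer text during a merge is one way to respect the brevity-bias concern: de-duplication removes redundancy without trimming detail.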

This three-part architecture is, in essence, an implementation of metacognition—or “thinking about thinking.” It formalizes the human learning process of acting (Generate), evaluating performance (Reflect), and updating mental models (Curate). This suggests that a key path to more advanced AI lies not just in scaling computational power, but in designing more sophisticated cognitive architectures that enable efficient and robust learning from experience.
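The three roles can be wired together as a loop around a single model that receives a different system prompt for each role. So the sketch runs offline, it replaces the LLM with a deterministic stub. The `call_llm` function, the role prompts, and the “LESSON:” output convention are hypothetical stand-ins, not the prompts or formats used by the ACE authors. As a further simplification, the Curator is written as plain code rather than as a third LLM role.

```python
ROLE_PROMPTS = {  # hypothetical role prompts for one underlying model
    "generator": "Solve the task using the playbook. Show your reasoning.",
    "reflector": "Diagnose the trace using its feedback. State each lesson as 'LESSON: ...'.",
}


def call_llm(role: str, content: str) -> str:
    """Deterministic stub for one model that is steered by role-specific system prompts."""
    _system_prompt = ROLE_PROMPTS[role]  # a real client would send this as the system message
    if role == "generator":
        # The stub only "knows" the unit conversion if the playbook tells it.
        return "answer=5000" if "convert km to meters" in content else "answer=5"
    if "feedback=fail" in content:  # reflector
        return "LESSON: Always convert km to meters before reporting a distance."
    return ""


def environment_check(answer: str) -> bool:
    """Stands in for an environment signal such as a validator or a passing test."""
    return answer == "answer=5000"


def curate(playbook: list[str], reflection: str) -> list[str]:
    """Curator as plain code: append each new lesson as its own entry (a delta)."""
    lessons = [line.removeprefix("LESSON:").strip()
               for line in reflection.splitlines() if line.startswith("LESSON:")]
    return playbook + [lesson for lesson in lessons if lesson not in playbook]


def run_ace(task: str, episodes: int) -> tuple[list[bool], list[str]]:
    playbook: list[str] = []
    results = []
    for _ in range(episodes):
        context = "PLAYBOOK:\n" + "\n".join(playbook) + f"\nTASK: {task}"
        answer = call_llm("generator", context)                    # Generator acts
        ok = environment_check(answer)
        results.append(ok)
        trace = f"{context}\nOUTPUT: {answer}\nfeedback={'pass' if ok else 'fail'}"
        reflection = call_llm("reflector", trace)                   # Reflector analyzes
        playbook = curate(playbook, reflection)                     # Curator integrates
    return results, playbook


if __name__ == "__main__":
    results, playbook = run_ace("The route is 5 km long. Report its length in meters.", episodes=3)
    print(results)
    print(playbook)
```

The first episode fails, the Reflector turns that failure into a lesson, and the Curator stores it. Later episodes then succeed without any change to the model itself.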

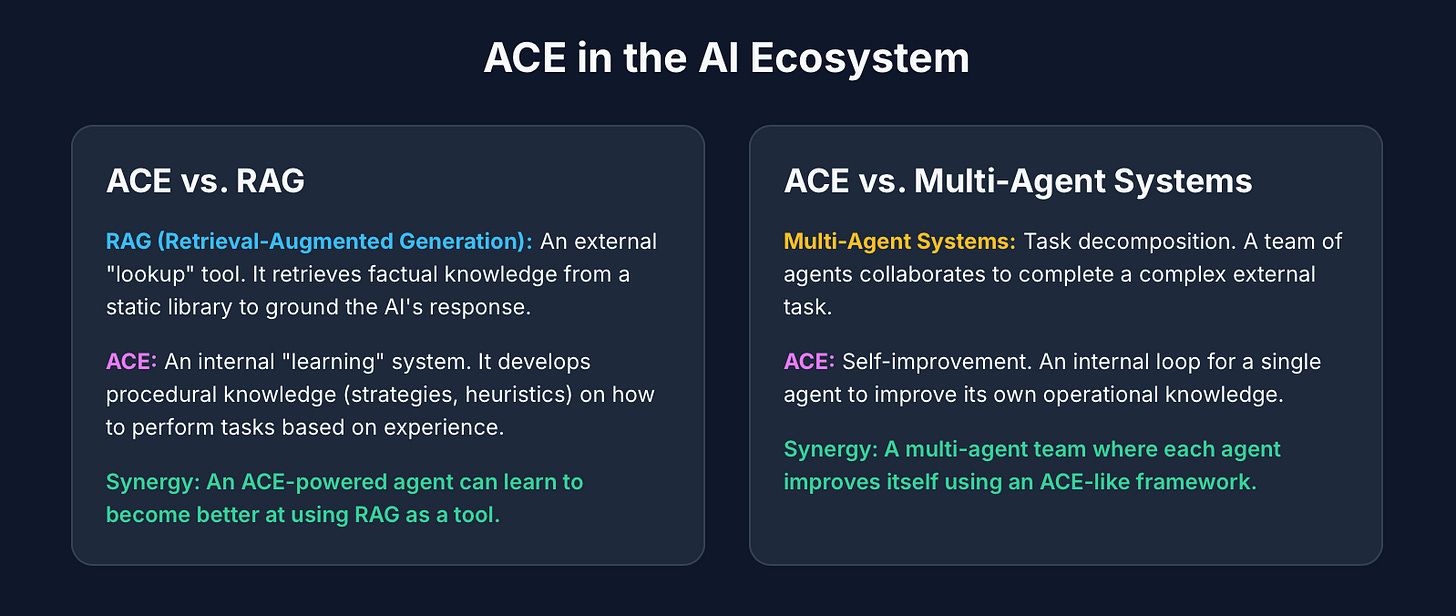

Part III: ACE in the Ecosystem - A Comparative Analysis

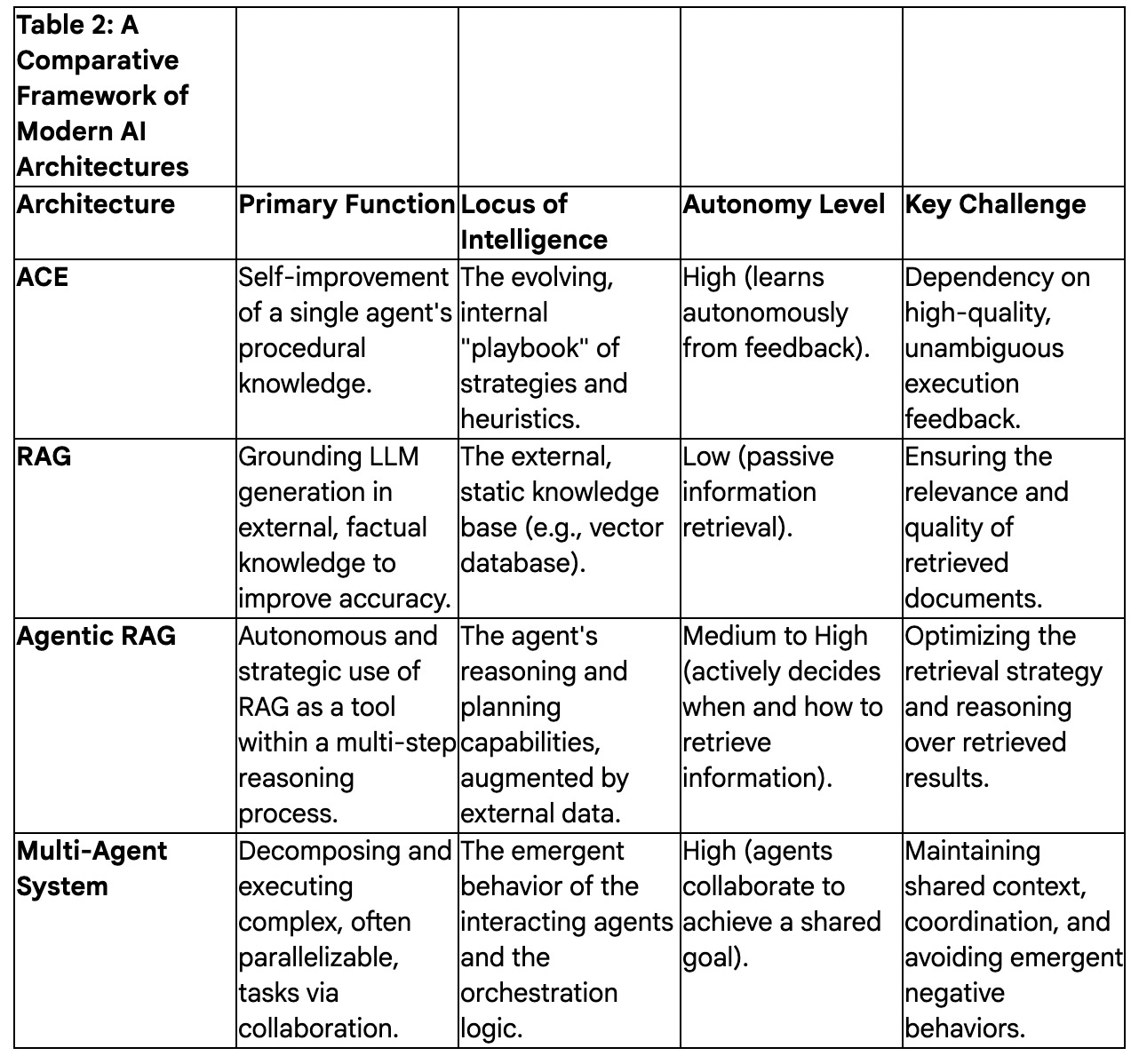

To fully appreciate the unique contribution of Agentic Context Engineering, it is essential to situate it within the broader landscape of advanced AI techniques. ACE is not a replacement for other powerful paradigms like Retrieval-Augmented Generation (RAG) or multi-agent systems; rather, it is a complementary technology that addresses a different part of the overall architectural challenge.

Section 6: ACE vs. Retrieval-Augmented Generation (RAG)

6.1 RAG Explained: Augmenting with External Knowledge

Retrieval-Augmented Generation (RAG) is a technique designed to combat LLM hallucinations and provide models with access to up-to-date, factual information.17 The process is straightforward: before generating a response, the system first retrieves relevant documents or data snippets from an external knowledge base (such as a corporate wiki or a technical manual stored in a vector database). This retrieved information is then passed to the LLM along with the user’s query, effectively “grounding” the model’s response in a specific, verifiable source.33
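The retrieve-then-generate flow can be shown in a few lines. This sketch ranks documents by word overlap, standing in for a vector database and similarity search, and then builds the grounded prompt. The scoring method, the prompt wording, and the `retrieve`/`build_prompt` names are illustrative assumptions, not any specific RAG library’s API.

```python
import re


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return IDs of up to k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(docs[d])), reverse=True)
    return [d for d in ranked[:k] if q & tokenize(docs[d])]  # skip zero-overlap docs


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Ground the model by placing retrieved sources ahead of the question."""
    sources = "\n".join(f"[{d}] {docs[d]}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the sources below and cite them by ID.\n"
        f"SOURCES:\n{sources}\n\nQUESTION: {query}"
    )


if __name__ == "__main__":
    kb = {"vpn": "To reset the VPN, restart the client and re-enter your token.",
          "pto": "Employees accrue 1.5 days of paid leave per month."}
    print(build_prompt("How do I reset the VPN client?", kb))
```

The knowledge base is read but never modified here, which is the sense in which plain RAG does not learn from its own outcomes.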

6.2 Key Differences: Internal Learning vs. External Lookup

The primary distinction between ACE and RAG lies in their function and the nature of the knowledge they manage.

Locus of Knowledge: RAG deals with external, factual knowledge. The knowledge base is a static library that the agent consults. ACE, in contrast, develops an internal, procedural knowledge base. Its playbook contains learned strategies, heuristics, and best practices—knowledge about how to perform tasks, not just what the facts are.3

Learning Mechanism: RAG is fundamentally a passive retrieval system. It is a highly effective “lookup” tool, but the knowledge base itself does not learn or adapt based on how it is used or whether the final task succeeds.33 ACE, by contrast, learns continuously: its entire purpose is to evolve its internal playbook based on performance feedback.33

6.3 The Synergy: Agentic RAG

Rather than being competitors, ACE and RAG are highly complementary. The convergence of these ideas has led to the concept of Agentic RAG.32 In an Agentic RAG system, the AI agent is not just a passive recipient of retrieved information. Instead, it actively and intelligently manages the retrieval process as part of its reasoning loop. It might learn to refine its search queries, decide which of several knowledge bases to consult for a given problem, or re-rank retrieved results based on learned heuristics.17

An ACE-powered agent could use RAG as one of its key tools. The ACE playbook might evolve to contain strategies specifically about how to use RAG more effectively. For instance, after failing a task due to outdated information, the Reflector might generate an insight like, “When researching recent events, reformulate the RAG query to include the current year,” which the Curator would then add to the playbook. In this way, the agent learns to become a more skilled researcher, leveraging its external library with growing expertise.
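Here is a minimal sketch of how a learned playbook entry like the one above could change retrieval behavior. For simplicity it assumes the lesson has been stored as a structured rule, a set of trigger words plus an action, rather than as free text. In an ACE-style system the strategy would more likely remain free text that the model reads and applies itself. `QueryRule` and `rewrite_query` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class QueryRule:
    """Hypothetical structured form of a playbook lesson about retrieval."""
    trigger_words: frozenset[str]
    append_current_year: bool = False


def rewrite_query(query: str, rules: list[QueryRule], today: date) -> str:
    """Apply matching rules to a search query before it is sent to the retriever."""
    words = set(query.lower().split())
    for rule in rules:
        if rule.append_current_year and rule.trigger_words & words:
            if str(today.year) not in query:  # avoid appending the year twice
                query = f"{query} {today.year}"
    return query


if __name__ == "__main__":
    rules = [QueryRule(frozenset({"latest", "recent", "current"}), append_current_year=True)]
    print(rewrite_query("latest EU AI Act guidance", rules, date.today()))
```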

Section 7: ACE vs. Multi-Agent Systems

7.1 Multi-Agent Systems Explained: Collaborative Task Execution

Multi-agent systems are designed to tackle complex problems by decomposing them into smaller subtasks that can be assigned to a team of specialized, autonomous agents.35 This “divide and conquer” approach is particularly effective for tasks that can be parallelized, such as conducting broad research across multiple topics simultaneously.37 A common architectural pattern is the “orchestrator-worker” model, where a lead agent plans the overall task and delegates specific sub-problems to a team of worker agents, synthesizing their results to produce a final output.35
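The orchestrator-worker pattern maps naturally onto a thread pool. In this sketch, the “agents” are ordinary functions and the orchestrator’s planning step is a fixed split of a topic list. In a real system the plan, the workers, and the synthesis step would each be LLM calls. `plan_subtasks`, `research_worker`, and `orchestrate` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_subtasks(goal: str, topics: list[str]) -> list[str]:
    """Orchestrator planning step: one subtask per topic (stand-in for an LLM planner)."""
    return [f"{goal}: {topic}" for topic in topics]


def research_worker(subtask: str) -> str:
    """Worker agent: would call tools or an LLM; here it returns a stub finding."""
    return f"finding for '{subtask}'"


def orchestrate(goal: str, topics: list[str], max_workers: int = 4) -> str:
    subtasks = plan_subtasks(goal, topics)
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        findings = list(pool.map(research_worker, subtasks))  # map preserves input order
    # Synthesis step: the orchestrator combines worker results into one report.
    return "\n".join(f"- {f}" for f in findings)


if __name__ == "__main__":
    print(orchestrate("Vendor risk", ["pricing", "security", "support"]))
```

Threads suit workers that mostly wait on network calls such as LLM or tool APIs; the shared-context challenge discussed next arises once workers need to see each other’s intermediate results.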

7.2 Key Differences: Self-Improvement vs. Task Decomposition

While ACE’s internal architecture uses three distinct “roles,” it is fundamentally different from a general multi-agent system.

Architectural Goal: The purpose of ACE’s three-part structure is the self-improvement of a single agent’s internal context. The Generator, Reflector, and Curator are part of an introspective learning loop. The purpose of a multi-agent system is the completion of a complex external task through collaboration and task decomposition.

Context Management: In the ACE framework, the context (the playbook) is the object being refined. In a multi-agent system, context is a critical resource that must be shared between agents to ensure coordinated and coherent action. Indeed, the primary challenge and failure mode in multi-agent systems is maintaining a shared context, or “context fabric,” so that agents do not work at cross-purposes or duplicate effort.35

Ultimately, these paradigms are not mutually exclusive but are orthogonal components of a more sophisticated, future AI architecture. A truly advanced system might consist of a multi-agent team (the organizational structure) where each individual agent has access to external knowledge via RAG tools (the library) and improves its own performance over time using an ACE-like internal learning loop (the brain).

Part IV: The Tangible Impact - Performance, Applications, and Implementation

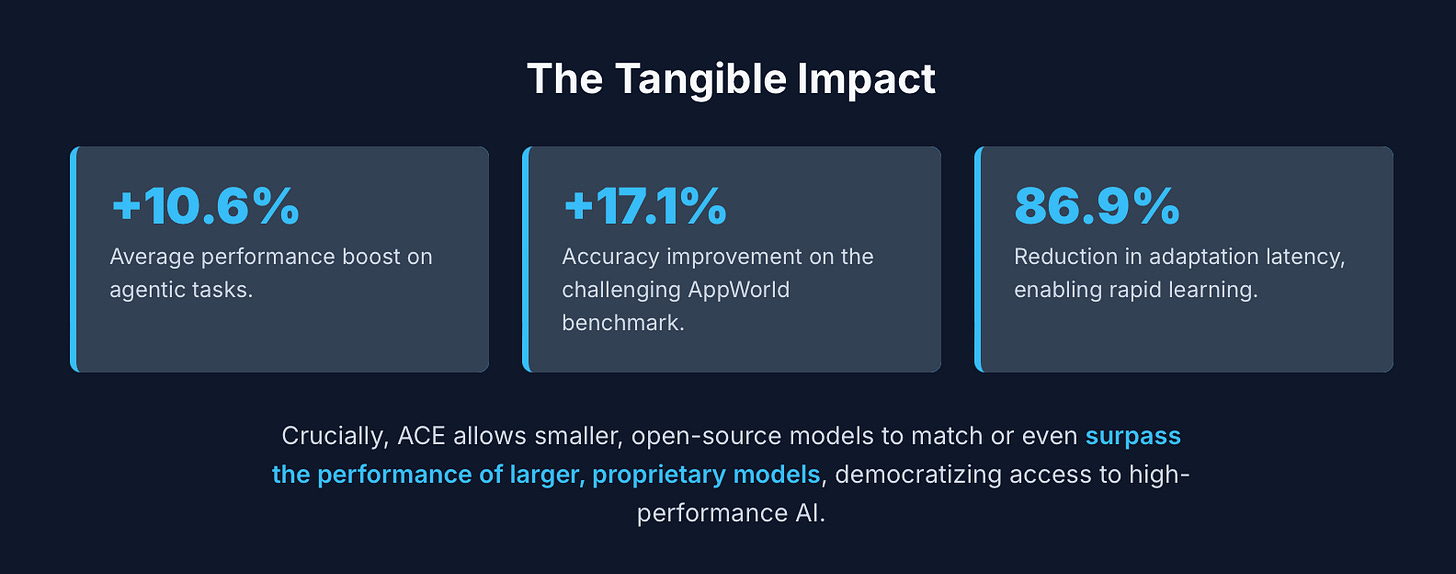

While the architectural concepts behind ACE are compelling, its true significance lies in its demonstrated, empirical impact on performance, efficiency, and the democratization of AI capabilities. The framework moves beyond theoretical promise to deliver measurable gains in real-world scenarios.

Section 8: The Empirical Evidence: A Leap in Performance and Efficiency

The Stanford-led research rigorously benchmarked the ACE framework, revealing substantial improvements across multiple domains.

8.1 Performance Gains on Complex Benchmarks

Agentic Tasks: When applied to tasks requiring an AI agent to interact with software applications, ACE delivered a consistent and significant performance boost, showing an average gain of +10.6% over strong baseline methods.25 In the challenging AppWorld benchmark, which tests an agent’s ability to operate real-world applications, ACE improved accuracy by as much as 17.1%.25

Domain-Specific Reasoning: In the domain of finance, which requires understanding complex, structured documents and specialized terminology, ACE enabled agents to construct comprehensive playbooks of domain-specific concepts. This resulted in an average performance increase of +8.6% on difficult financial reasoning tasks.25

Democratizing High Performance: Perhaps the most striking result was ACE’s ability to level the playing field. When implemented with a smaller, open-source model (DeepSeek-V3.1), the ACE-powered agent was able to match, and on harder test splits even surpass, the performance of the top-ranked production-level agent on the AppWorld leaderboard (IBM’s CUGA, powered by the proprietary GPT-4.1).23 This suggests that a superior learning architecture can compensate for a raw deficit in model size, potentially disrupting the current market dynamics where performance is often equated with parameter count.

8.2 Radical Improvements in Efficiency

Beyond raw performance, ACE demonstrated dramatic gains in the efficiency of the adaptation process itself.

Reduced Latency: By replacing slow, monolithic context rewriting with fast, incremental delta updates, ACE was able to reduce adaptation latency by an average of 86.9%.25 This means the agent can learn and improve much more rapidly.

Lower Cost: This speed translates directly to lower costs. The ACE method requires fewer computational rollouts and consumes fewer tokens, making the process of continuous self-improvement economically sustainable.25

8.3 Learning Without Labels

A critical feature of the ACE framework is its ability to learn effectively without requiring a manually curated dataset of “correct answers.” It can improve its playbook by leveraging natural execution feedback and environmental signals—such as whether a piece of code ran successfully, a unit test passed, or a validation check was met.23 This capacity for unsupervised or self-supervised learning from interaction is a key prerequisite for building truly autonomous, continuously improving AI systems.
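Concretely, learning without labels means the signal comes from running something rather than from a reference answer. The sketch below executes a candidate sorting function and checks properties any correct output must satisfy (sorted order, same elements), so no expected outputs are needed. The result is a pass/fail report of the kind a Reflector could consume. `execution_feedback`, `SORT_PROPERTIES`, and the report format are assumptions. Executing model-generated code this way is only appropriate inside a proper sandbox.

```python
import traceback
from collections import Counter
from typing import Callable

# Properties any correct sort must satisfy; checking them needs no reference answers.
SORT_PROPERTIES: dict[str, Callable[[tuple, list], bool]] = {
    "is_sorted": lambda args, out: all(a <= b for a, b in zip(out, out[1:])),
    "same_elements": lambda args, out: Counter(args[0]) == Counter(out),
}


def execution_feedback(candidate_source: str, func_name: str,
                       inputs: list[tuple],
                       properties: dict[str, Callable[[tuple, list], bool]]) -> dict:
    """Run candidate code on sample inputs and report property violations.

    WARNING: exec() runs arbitrary code; only do this inside a real sandbox.
    """
    namespace: dict = {}
    try:
        exec(candidate_source, namespace)
        func = namespace[func_name]
    except Exception:
        return {"passed": False, "error": traceback.format_exc(limit=1), "failures": []}
    failures = []
    for args in inputs:
        try:
            out = func(*args)
        except Exception as exc:
            failures.append(f"raised {type(exc).__name__} for input {args!r}")
            continue
        for name, holds in properties.items():
            if not holds(args, out):
                failures.append(f"{name} violated for input {args!r}")
    return {"passed": not failures, "error": None, "failures": failures}


if __name__ == "__main__":
    inputs = [([3, 1, 3],), ([],), ([2, 1],)]
    buggy = "def my_sort(xs):\n    return sorted(set(xs))\n"
    print(execution_feedback(buggy, "my_sort", inputs, SORT_PROPERTIES))
```

A report such as “same_elements violated for input ([3, 1, 3],)” is the kind of concrete signal a Reflector could turn into a lesson like “do not deduplicate when sorting.”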

Section 9: From the Lab to the Real World: Practical Applications of ACE

The principles of Agentic Context Engineering are not limited to academic benchmarks; they have direct applications across a wide range of industries.

Software Development and DevOps: An ACE-powered coding assistant could build a rich playbook of best practices tailored to a specific codebase. For example, after generating code that causes a performance bottleneck, the Reflector could analyze the profiling data and generate a lesson: “For this repository, prefer vectorized NumPy operations over Python for-loops for array manipulation.” The Curator would add this to the playbook, ensuring future code generation is more efficient.13 This aligns with the vision of creating reliable AI workflows that understand and respect a project’s unique architecture and conventions.41

Finance and Regulated Industries: A financial analysis agent tasked with extracting data from quarterly earnings reports could learn to navigate the unique formatting of different companies. After failing to find a key metric, its playbook could be updated with a new rule: “For Company XYZ’s 10-Q filings, the ‘Net Revenue’ figure is located in footnote 4, not the main table.” This kind of specific, evolving knowledge is invaluable for accuracy.13 The transparent, auditable nature of the text-based playbook is also a major asset for compliance and regulatory oversight.30

Customer Support and Incident Response: In a customer support context, an agent could learn the most effective troubleshooting sequence for a new product bug. After a human agent resolves a ticket, the ACE framework could analyze the transcript, extract the successful steps, and add them to the playbook, allowing the AI to handle similar issues autonomously in the future.4 For on-call engineers, an agentic system can learn from every system incident, dynamically updating its runbooks and preserving critical institutional knowledge that might otherwise be lost when an employee leaves the team.43

Section 10: The Road Ahead: Challenges, Limitations, and the Future of Agentic Systems

Despite its promise, ACE is not a silver bullet. Its implementation comes with its own set of challenges, and it represents one piece of a much larger puzzle in the quest for production-grade agentic AI.

10.1 Challenges and Limitations

Dependency on High-Quality Feedback: ACE’s learning cycle is powered by feedback. Its ability to improve is therefore fundamentally constrained by the quality and clarity of the execution signals it receives from its environment. In domains where success or failure is ambiguous or difficult to measure, the Reflector may struggle to distill useful lessons, potentially degrading performance.27

Brittleness Across Models: A playbook is a collection of heuristics that work well for a specific underlying LLM. A strategy optimized for one model may not transfer effectively to a new, different model with its own unique inductive biases. This creates a potential maintenance challenge, as playbooks may need to be re-adapted when an organization upgrades its core foundation models.11

Context Management Remains a Hard Problem: While ACE provides a sophisticated framework for evolving the content of the context, the engineering challenges of managing the context window itself persist. Issues of cost, latency, and the risk of “context bloat” are mitigated but not eliminated.10 Context engineering, as a discipline, remains a critical and ongoing area of work.

10.2 The Future is Context-Aware: The “Agentic Organization”

Looking forward, the principles embodied by ACE are foundational to the next paradigm of enterprise AI. The strategic goal is shifting from deploying isolated AI tools to building what can be termed the “agentic organization”.18 This vision involves a fundamental reimagining of business processes to be AI-first, with autonomous agents managing end-to-end workflows and humans moving “above the loop” to provide strategic direction, oversight, and exception handling.18

In this future, success will depend less on the raw capability of a company’s AI models and more on the robustness of the “AgenticOps” infrastructure built around them [8]. Analogous to DevOps for traditional software, this discipline covers the full lifecycle of testing, monitoring, securing, and optimizing AI agents in production. It requires investment not only in the “brains” (the models) but in the “nervous system” that lets them operate reliably at scale: workflow engines, monitoring tools, governance frameworks, and, critically, adaptive learning architectures like ACE that let agents improve from the feedback their actions generate.
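As a small example of the testing discipline described here, the Python sketch below gates a playbook update behind a regression check: a candidate playbook is promoted only if its success rate on a fixed evaluation set is at least the current playbook's plus a margin. `run_task`, `success_rate`, `should_promote`, and `min_gain` are hypothetical names. A single pass per task is a simplification; agent runs are stochastic, so a real gate would repeat runs and account for variance.

```python
from typing import Callable, Sequence

# Hypothetical hook: run one agent episode on `task` using `playbook`; True on success.
RunTask = Callable[[Sequence[str], str], bool]


def success_rate(playbook: Sequence[str], tasks: Sequence[str], run_task: RunTask) -> float:
    """Fraction of evaluation tasks the agent completes with this playbook."""
    if not tasks:
        raise ValueError("evaluation set must not be empty")
    passed = sum(1 for task in tasks if run_task(playbook, task))
    return passed / len(tasks)


def should_promote(
    current: Sequence[str],
    candidate: Sequence[str],
    tasks: Sequence[str],
    run_task: RunTask,
    min_gain: float = 0.0,
) -> bool:
    """Promote `candidate` only if it matches or beats `current` by at least `min_gain`."""
    candidate_rate = success_rate(candidate, tasks, run_task)
    current_rate = success_rate(current, tasks, run_task)
    return candidate_rate >= current_rate + min_gain
```

Evaluating both playbooks on the same fixed task set keeps the comparison fair; raising `min_gain` above zero makes the gate reject changes whose apparent improvement is within noise.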

Works cited

1. Context Engineering vs Prompt Engineering | by Mehul Gupta | Data Science in Your Pocket, accessed on October 16, 2025, https://medium.com/data-science-in-your-pocket/context-engineering-vs-prompt-engineering-379e9622e19d

2. Agentic Context Engineering: Prompting Strikes Back - Super-Agentic AI Blog, accessed on October 16, 2025, https://shashikantjagtap.net/agentic-context-engineering-prompting-strikes-back/

3. Agentic Context Engineering: Prompting Strikes Back | by Shashi Jagtap | Superagentic AI, accessed on October 16, 2025, https://medium.com/superagentic-ai/agentic-context-engineering-prompting-strikes-back-c5beade49acc

4. Context Engineering: The Future of AI Development - Voiceflow, accessed on October 16, 2025, https://www.voiceflow.com/blog/context-engineering

5. The rise of “context engineering” - LangChain Blog, accessed on October 16, 2025, https://blog.langchain.com/the-rise-of-context-engineering/

6. Everybody is talking about how context engineering is replacing prompt engineering nowadays. But what really is this new buzzword? : r/AI_Agents - Reddit, accessed on October 16, 2025, https://www.reddit.com/r/AI_Agents/comments/1mq935t/everybody_is_talking_about_how_context/

7. Context Engineering Guide, accessed on October 16, 2025, https://www.promptingguide.ai/guides/context-engineering-guide

8. Agentic AI Engineering: The Blueprint for Production-Grade AI Agents | by Yi Zhou - Medium, accessed on October 16, 2025, https://medium.com/generative-ai-revolution-ai-native-transformation/agentic-ai-engineering-the-blueprint-for-production-grade-ai-agents-20358468b0b1

9. Context Engineering - What it is, and techniques to consider - LlamaIndex, accessed on October 16, 2025, https://www.llamaindex.ai/blog/context-engineering-what-it-is-and-techniques-to-consider

10. Context Engineering for AI Agents: Lessons from Building Manus, accessed on October 16, 2025, https://manus.im/blog/Context-Engineering-for-AI-Agents-Lessons-from-Building-Manus

11. Agent Optimization: Why Context Engineering Isn’t Enough ..., accessed on October 16, 2025, https://super-agentic.ai/resources/super-posts/agent-optimization-why-context-engineering-isnt-enough/

12. Agentic AI - Wikipedia, accessed on October 16, 2025, https://en.wikipedia.org/wiki/Agentic_AI

13. What is agentic AI? Definition and differentiators - Google Cloud, accessed on October 16, 2025, https://cloud.google.com/discover/what-is-agentic-ai

14. What is Agentic AI? - AWS, accessed on October 16, 2025, https://aws.amazon.com/what-is/agentic-ai/

15. What is Agentic AI? | IBM, accessed on October 16, 2025, https://www.ibm.com/think/topics/agentic-ai

16. What is Agentic AI? Benefits, Challenges, and Implementation Strategy - Logic20/20, accessed on October 16, 2025, https://logic2020.com/insight/what-is-agentic-ai-benefits-challenges-implementation/

17. Traditional RAG vs. Agentic RAG—Why AI Agents Need Dynamic Knowledge to Get Smarter | NVIDIA Technical Blog, accessed on October 16, 2025, https://developer.nvidia.com/blog/traditional-rag-vs-agentic-rag-why-ai-agents-need-dynamic-knowledge-to-get-smarter/

18. Seizing the agentic AI advantage | McKinsey, accessed on October 16, 2025, https://www.mckinsey.com/capabilities/quantumblack/our-insights/seizing-the-agentic-ai-advantage

19. The agentic organization: A new operating model for AI | McKinsey, accessed on October 16, 2025, https://www.mckinsey.com/capabilities/people-and-organizational-performance/our-insights/the-agentic-organization-contours-of-the-next-paradigm-for-the-ai-era

20. Context Engineering for AI Agents: Building Smarter, More Aware Machines, accessed on October 16, 2025, https://insights.daffodilsw.com/blog/context-engineering-for-ai-agents

21. Context Engineering: The Secret to High-Performing Agentic AI - Multimodal, accessed on October 16, 2025, https://www.multimodal.dev/post/context-engineering

22. How to Perform Effective Agentic Context Engineering | Towards Data Science, accessed on October 16, 2025, https://towardsdatascience.com/how-to-perform-effective-agentic-context-engineering/

23. [2510.04618] Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models - arXiv, accessed on October 16, 2025, https://www.arxiv.org/abs/2510.04618

24. Agentic Context Engineering (ACE): Self-Improving LLMs via Evolving Contexts, Not Fine-Tuning : r/machinelearningnews - Reddit, accessed on October 16, 2025, https://www.reddit.com/r/machinelearningnews/comments/1o2ynr1/agentic_context_engineering_ace_selfimproving/

25. Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models - arXiv, accessed on October 16, 2025, https://arxiv.org/html/2510.04618v1

26. Agentic Context Engineering (ACE): The Future of AI is here - Diztel, accessed on October 16, 2025, https://diztel.com/ai/agentic-context-engineering-ace-the-future-of-ai-is-here/

27. Agentic Context Engineering for Evolving LLMs - Emergent Mind, accessed on October 16, 2025, https://www.emergentmind.com/papers/2510.04618

28. Agentic Context Engineering: Learning Comprehensive Contexts for Self-Improving Language Models | OpenReview, accessed on October 16, 2025, https://openreview.net/forum?id=eC4ygDs02R

29. Is Fine-Tuning Dead? Discover Agentic Context Engineering for Model Evolution Without Fine-Tuning - 36氪, accessed on October 16, 2025, https://eu.36kr.com/en/p/3504237709859976

30. Agentic Context Engineering: Evolving Contexts for Self-Improving Language Models - YouTube, accessed on October 16, 2025,

31. @Stanford just proved you don’t need to fine-tune an AI model to make it smarter: +10.6% over GPT-4 agents w/ zero retraining : r/singularity - Reddit, accessed on October 16, 2025, https://www.reddit.com/r/singularity/comments/1o4ln9n/stanford_just_proved_you_dont_need_to_finetune_an/

32. Agentic Retrieval-Augmented Generation: A Survey on Agentic RAG - arXiv, accessed on October 16, 2025, https://arxiv.org/html/2501.09136v1

33. Agentic AI vs RAG - A Handy Guide - Ampcome, accessed on October 16, 2025, https://www.ampcome.com/post/agentic-ai-vs-rag

34. What is Agentic RAG? | IBM, accessed on October 16, 2025, https://www.ibm.com/think/topics/agentic-rag

35. Context Engineering & Multi-Agent Strategy, accessed on October 16, 2025, https://www.forwardfuture.ai/p/context-engineering-multi-agent-strategy

36. How to Build Multi Agent AI Systems With Context Engineering - Vellum AI, accessed on October 16, 2025, https://www.vellum.ai/blog/multi-agent-systems-building-with-context-engineering

37. How and when to build multi-agent systems - LangChain Blog, accessed on October 16, 2025, https://blog.langchain.com/how-and-when-to-build-multi-agent-systems/

38. Context Engineering for Agentic Applications - Open Data Science, accessed on October 16, 2025, https://opendatascience.com/context-engineering-for-agentic-applications/

39. Architecting Smarter Multi-Agent Systems with Context Engineering - OneReach, accessed on October 16, 2025, https://onereach.ai/blog/smarter-context-engineering-multi-agent-systems/

40. Cluster444/agentic: An agentic workflow tool that provides context engineering support for opencode - GitHub, accessed on October 16, 2025, https://github.com/Cluster444/agentic

41. How to build reliable AI workflows with agentic primitives and context engineering, accessed on October 16, 2025, https://github.blog/ai-and-ml/github-copilot/how-to-build-reliable-ai-workflows-with-agentic-primitives-and-context-engineering/

42. coleam00/context-engineering-intro: Context engineering is the new vibe coding - it’s the way to actually make AI coding assistants work. Claude Code is the best for this so that’s what this repo is centered around, but you can apply this strategy with any AI coding assistant! - GitHub, accessed on October 16, 2025, https://github.com/coleam00/context-engineering-intro

43. The Top 5 Benefits of Agentic AI in On-call Engineering - Resolve.ai, accessed on October 16, 2025, https://resolve.ai/blog/Top-5-Benefits